Ever since I came across Kirkpatrick’s framework for evaluating training, I have felt it was good common sense – nothing as practical as a good framework! Indeed, it is such good common sense that there are others who should be given some of the credit.

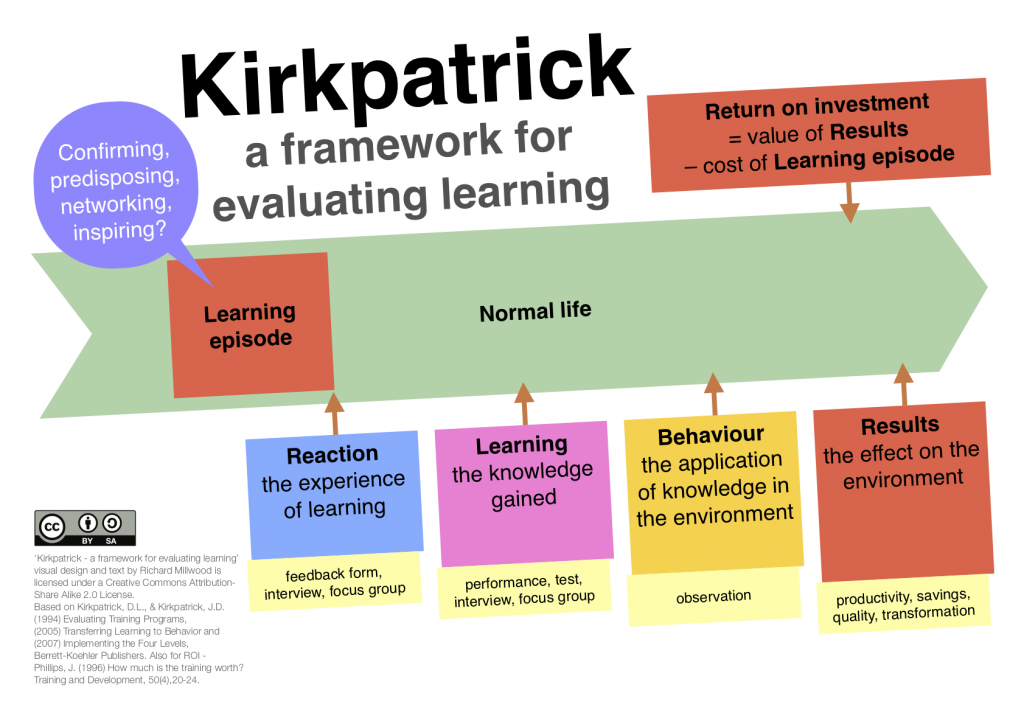

Nevertheless, I was disquieted by the notion of ‘levels’ being applied to the original four categories – it seemed to me that, like Bloom’s taxonomy, it made too much of some kind of progression or value. The reason for this is that evaluation is conceived as the employer’s business of deciding whether the training was any good, so the later ‘levels’, such as Behaviour and Results, are considered of higher value than Reaction and Learning. Kirkpatrick’s insight was to see that many evaluations stopped at Reaction and failed to see the need for further work. I feel that Learning, Behaviour and Results naturally occur later, so I have made this diagram, which places them as moments along a time continuum. This guides the researcher in knowing when to look for each kind of impact the learning episode had. I also make a few suggestions for the kinds of methods that might be employed, but these are not exhaustive.

Phillips came along and reinforced the employer’s perspective by adding Return on Investment, making it clear that we should recognise that training costs money, and that the benefits ought to be contrasted with this cost.
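As a rough illustration, the ROI calculation is usually written as net benefit over cost, expressed as a percentage. The symbols $B$ (monetary benefit attributed to the training) and $C$ (full cost of the training) are my own shorthand, and the figures in the worked line are invented purely for illustration.

```latex
\mathrm{ROI}\,(\%) = \frac{B - C}{C} \times 100
\qquad\text{e.g. } B = 15\,000,\ C = 10\,000
\ \Rightarrow\ \mathrm{ROI} = \frac{15\,000 - 10\,000}{10\,000} \times 100 = 50\%
```

The hard part is not the arithmetic but deciding how much of $B$ can fairly be attributed to the training, and what belongs in $C$.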

I have no quarrel with Phillips, but feel that the educational researcher may be driven not only by a single-perspective stance such as ‘benefit to the firm’, but also by what the learner may experience: fulfilment, stimulus, and even having a moment to reflect. Without leaving the paymaster behind, we can still include such ideas as Confirming (that you are competent), Predisposition (towards further learning), Networking (to build a personal learning network), and even Inspiration, thus building a kind of learning capital in your workers! These are my additions to this common-sense and useful framework, and you can download a printable poster here.

There’s been debate about Phillips’ Return on Investment of Training/Learning, as so many other factors, such as marketing/advertising programs, sales force incentives, more efficient production/service processes, etc., come into play when attempting to determine the contribution of Training/Learning, except perhaps when all other factors have been held constant.

There’s also the issue of transfer of learning to practice/behaviour. It has been found that while 80% may be able to repeat what they’ve learned in a post-session test, only about 20% manage to make the transfer. Post-learning support from mentors, coaches or work colleagues is therefore necessary to enable the translation, and can move the success rate to 80%. But is that time and effort factored in when determining RoI?

So overall I think you do well to keep clear of the entangling RoI net!

Thanks Bill, much appreciated comments. I see Kirkpatrick’s and Phillips’ ideas as a useful framework for thinking about testing the effectiveness of a learning intervention. It simply is common sense at one level – a heuristic for thinking about why, what and when I might ask questions to evaluate. Its precision as a ‘scientific’ model suffers from many different complexities, but its usefulness, in challenging the naivety of simple ‘reaction’ evaluation and offering other areas to consider, is in my view fantastic.